Trawler - A New Data Catalogue

- Authors

- Name

- Alex Sparrow

- alex@scalar.dev

A number of tools, known variously as data catalogues or, more abstractly, metadata platforms, have emerged in the last few years to discover, track, link and monitor data assets within an organisation. The vision behind adopting a data catalogue is to empower users across an organisation to track down and understand what datasets (and other data resources) are available and how they are processed and used. Among the open source offerings are datahub and open-metadata, and there are plenty more, both open source and proprietary.

What does a data catalogue do?

Generally quite a lot. You'll find most of the offerings have some variation on the following set of core features:

- Collect metadata from data sources, dashboards and ML models, typically using a set of connectors to different kinds of data systems (e.g. SQL databases, S3, Kafka, Spark).

- Allow users to search and discover datasets along with their core metadata.

- Allow users to understand the lineage of a given dataset (where it was produced from and how it was processed).

On top of this, some platforms might also support:

- Social features: commenting, tagging, sharing and generally curating institutional knowledge around data assets.

- Data observability: recording changes to datasets and detecting potential issues.

What's missing in existing solutions

Whilst existing tools are undoubtedly powerful and have done a lot to advance the state-of-the-art, we've found them lacking in a few ways.

Firstly, many require a very significant up-front investment to use. Often this is just in terms of technical deployment complexity - for instance datahub has approximately 10 separate services in a minimal deployment. open-metadata does a little better requiring only postgres and elasticsearch. For a large organisation, this might well be fine but for a small startup, it's a large barrier to adoption.

Additionally, all of these tools are based on mutually incompatible metadata formats. This makes it difficult to mix and match tooling and to allow different teams to instrument and catalogue their data independently. At the time of writing, none of the open source offerings support existing standards for data catalogues such as dcat.

A related problem is that many offerings are difficult to extend with new data asset types or custom metadata. Whilst open-metadata is at least based upon well-documented JSON schemas, datahub makes use of a fairly esoteric schema description language from linkedin.

How is trawler different?

We started building trawler to experiment with some new ideas for building a data catalogue that can scale from small teams to large organisations. These are:

Easy deployment

You should be able to deploy trawler in minutes not hours or days. The most basic configuration requires only a postgres database.

Compatible

We intend to support existing ingestion formats where possible (datahub is partially supported and we'd like to add open-metadata in future). This will allow users to take advantage of a large ecosystem of existing data connectors and save us duplicating this existing work.

Standards compliant

As well as supporting existing ingest formats, we plan to support dcat and prov via JSON-LD for metadata consumers. Whilst these, being semantic web standards, can be a little scary at first, we realised that semantic web protocols have already solved a number of problems around annotating a knowledge graph (which is fundamentally what a data catalogue is) with rich and extensible metadata.

Whilst we unfortunately won't be able to adopt semantic web standards wholesale in how we represent data internally, we can borrow some of their ideas, including how types and attributes are namespaced using URIs and how entities are referenced using URNs.
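To make the namespacing idea a little more concrete, here is a minimal sketch of how a dataset might be described using dcat and prov terms in JSON-LD. The `urn:trawler:...` identifiers, the dataset names and the helper functions are hypothetical and chosen purely for illustration; trawler's actual identifier scheme and output may well look different.

```python
from __future__ import annotations

import json

# Namespace prefixes map short names to full URIs, so that "dcat:Dataset"
# stands for "http://www.w3.org/ns/dcat#Dataset".
CONTEXT = {
    "dcat": "http://www.w3.org/ns/dcat#",
    "dct": "http://purl.org/dc/terms/",
    "prov": "http://www.w3.org/ns/prov#",
}


def describe_dataset(urn: str, title: str, derived_from: list[str]) -> dict:
    """Build a JSON-LD description of a dataset (illustrative only)."""
    return {
        "@context": CONTEXT,
        "@id": urn,
        "@type": "dcat:Dataset",
        "dct:title": title,
        # prov:wasDerivedFrom records lineage by pointing at upstream entities.
        "prov:wasDerivedFrom": [{"@id": upstream} for upstream in derived_from],
    }


def expand(term: str) -> str:
    """Expand a prefixed term such as 'dcat:Dataset' into its full URI."""
    prefix, _, name = term.partition(":")
    return CONTEXT[prefix] + name


# Hypothetical URNs; not trawler's real identifier scheme.
doc = describe_dataset(
    "urn:trawler:dataset:warehouse/daily_orders",
    "Daily orders",
    ["urn:trawler:dataset:warehouse/raw_orders"],
)
print(json.dumps(doc, indent=2))
```

Because every type and attribute resolves to a full URI, custom metadata can live under its own namespace without colliding with the standard vocabularies.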

Federation

ActivityPub has emerged recently as a means of building decentralised social networks and has already started to see use within code collaboration. We believe the next opportunity here is for data and metadata. Why?

Federation allows gradual adoption of data catalogues within larger organisations where individual teams can start to collect metadata and then share it within the wider organisation. If different teams want to use different tools, they can.

Federation allows organisations to share metadata and publish updates over time. If organisation A wants to share a dataset with organisation B, they should be able to connect their data catalogues together.

Public and open source data. As an extreme case, a government body might publish a dataset which is then consumed and processed by interested parties. Federation should support a derived dataset "following" the upstream dataset and receiving updates similar to how a fork and an upstream might work in the context of something like github.
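As a rough illustration of the "following" idea, the sketch below builds an ActivityStreams `Follow` activity of the kind ActivityPub servers exchange. Treating datasets as actors, and the example URLs themselves, are assumptions made for the sake of the example; how trawler will actually model federation is still an open question.

```python
import json


def follow_activity(actor: str, target: str) -> dict:
    """Build an ActivityStreams 2.0 Follow activity (illustrative only)."""
    return {
        "@context": "https://www.w3.org/ns/activitystreams",
        "type": "Follow",
        "actor": actor,    # the derived dataset that wants to receive updates
        "object": target,  # the upstream dataset being followed
    }


# Hypothetical URLs for datasets hosted in two separate catalogue instances.
activity = follow_activity(
    "https://catalogue.team-b.example/datasets/cleaned-census",
    "https://data.gov.example/datasets/census",
)
print(json.dumps(activity, indent=2))
```

In ActivityPub, a `Follow` is delivered to the target's inbox and is typically answered with an `Accept`, after which the follower receives subsequent updates.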

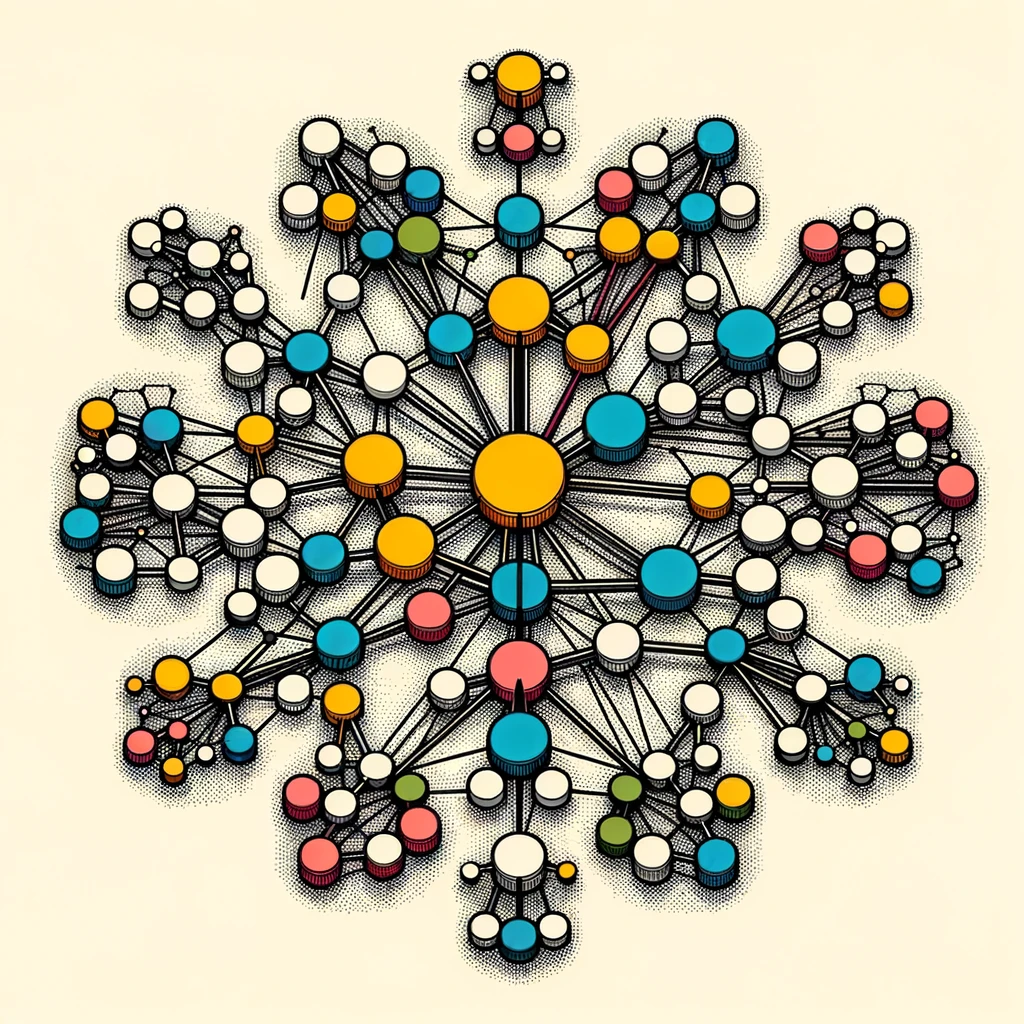

Clearly, some of these goals are likely to be challenging but we see data catalogues evolving to look something like a federated knowledge graph. You might imagine a graph being decomposable into a number of "cliques" which are highly connected between themselves but only sparsely connected to other bits of the graph. For instance a team might combine and enhance a large number of raw datasets but produce only a relatively small number of "data products" consumed by other teams. In the context of federation, these cliques might be hosted in independent data catalogues.
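The sketch below illustrates this decomposition on a toy lineage graph. The dataset names, the team assignments and the `classify_edges` helper are all made up for the example; the point is only that most edges stay inside one catalogue while a handful cross between catalogues.

```python
from collections import Counter

# Hypothetical mapping from each dataset to the catalogue that hosts it.
hosted_in = {
    "raw_clicks": "team-a",
    "raw_users": "team-a",
    "sessions": "team-a",
    "user_profiles": "team-a",
    "engagement_report": "team-b",
}

# Hypothetical lineage edges as (upstream, downstream) pairs.
edges = [
    ("raw_clicks", "sessions"),
    ("raw_users", "sessions"),
    ("raw_users", "user_profiles"),
    ("sessions", "user_profiles"),
    ("user_profiles", "engagement_report"),
]


def classify_edges(edges, hosted_in):
    """Count edges that stay within one catalogue versus those that cross."""
    counts = Counter()
    for upstream, downstream in edges:
        same = hosted_in[upstream] == hosted_in[downstream]
        counts["internal" if same else "federated"] += 1
    return counts


counts = classify_edges(edges, hosted_in)

# Datasets consumed outside their own catalogue act as "data products".
data_products = sorted(
    {up for up, down in edges if hosted_in[up] != hosted_in[down]}
)

print(counts["internal"], counts["federated"], data_products)
```

In this toy graph only `user_profiles` is consumed across a catalogue boundary, so it is the only dataset the two catalogues would need to exchange metadata about.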

Where next?

This is clearly an ambitious set of features, so where do we start? Our first goal is to produce an initial version of trawler with the following feature set:

- Useful datahub compatibility. You should be able to ingest most data sources to a reasonable level of fidelity using the existing datahub cli.

- A complete but alpha-quality data model. We'll write more about trawler's data model in an upcoming article but getting the representation and semantics correct is an important challenge.

- A working (but limited) UI. For initial releases, we'll make sure that metadata is exposed in the UI but don't plan to implement much in the way of interaction initially.

- Semantic web API for accessing stored metadata. Since federation will be based upon how we can represent metadata and exchange it between instances, we need to figure out how to represent these data in a way that can be used across implementations.

That's all for now folks. Thanks for reading and look out for our follow-up article, where we will discuss trawler's architecture. For now, feel free to star us and follow along on github.